Ollama

Ollama is a tool that simplifies running open-source large language models (LLMs) locally on your computer. It acts as both a model manager and a runtime, so you can interact with these models without relying on cloud services.

- Download Ollama from https://ollama.com/ and install it locally.

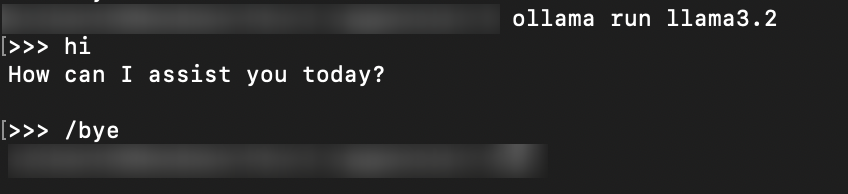

- Once the installation is complete, run the following commands to pull the required LLM and then start it.

ollama pull llama3.2

ollama run llama3.2

- The Ollama server will run on http://localhost:11434/

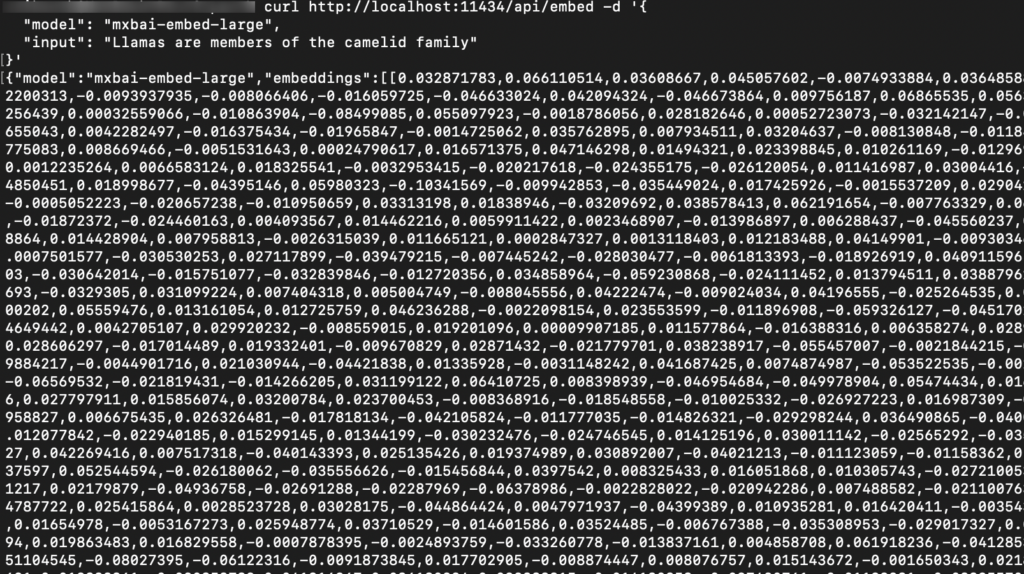
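To talk to the running server from code rather than the interactive prompt, a minimal Python sketch might look like the following. It assumes the default address shown above and uses Ollama's /api/generate endpoint with streaming turned off; the helper names `build_generate_request` and `generate` are illustrative and not part of Ollama.

```python
import json
import urllib.request

# Default local address from the step above; adjust if your server differs.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(prompt, model="llama3.2"):
    """Build a non-streaming POST request for the /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama3.2", timeout=120):
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False the whole answer arrives in the "response" field.
    return body["response"]
```

With the server running and llama3.2 pulled, calling `generate("Why is the sky blue?")` should return the model's answer as a single string.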

- Run the following command to pull the embedding model. Embedding models do not support text generation, so there is no need to start this one with ollama run; pulling it is enough.

ollama pull mxbai-embed-large:latest

- Verify that the embedding model is working correctly by using the following curl command:

curl http://localhost:11434/api/embed -d '{
"model": "mxbai-embed-large",
"input": "Llamas are members of the camelid family"
}'

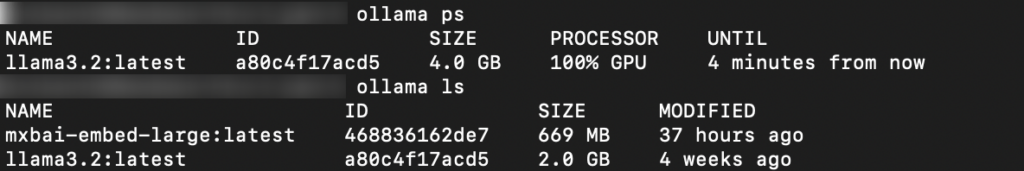
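The same check can be done from Python. The sketch below is an illustration under stated assumptions: it posts to the same /api/embed endpoint as the curl command and reads the `embeddings` field of the response, and it adds a small `cosine_similarity` helper (a hypothetical name, not part of Ollama) to compare two embeddings.

```python
import json
import math
import urllib.request

# Same endpoint as the curl command above.
EMBED_URL = "http://localhost:11434/api/embed"


def embed(texts, model="mxbai-embed-large", timeout=60):
    """Return one embedding (a list of floats) per input text."""
    payload = json.dumps({"model": model, "input": texts}).encode("utf-8")
    request = urllib.request.Request(
        EMBED_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["embeddings"]


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)
```

For example, `vectors = embed(["Llamas are camelids", "Alpacas are camelids"])` followed by `cosine_similarity(vectors[0], vectors[1])` gives a quick sanity check that related sentences score close to each other. With mxbai-embed-large, `len(vectors[0])` should be 1024, which is the vector size used for the Qdrant collection in this post.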

- Useful Ollama commands (ps lists the models currently running, ls lists the models available locally):

ollama ps

ollama ls
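The same information is also exposed over the local HTTP API, which can be handy in scripts. The sketch below assumes the default server address and uses the /api/tags (downloaded models) and /api/ps (running models) endpoints; `fetch_json` and `model_names` are illustrative helper names.

```python
import json
import urllib.request

# Default local address of the Ollama server.
OLLAMA_URL = "http://localhost:11434"


def fetch_json(path, timeout=10):
    """GET a path on the local Ollama server and decode the JSON body."""
    with urllib.request.urlopen(f"{OLLAMA_URL}{path}", timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def model_names(listing):
    """Extract model names from an /api/tags or /api/ps response body."""
    return [entry["name"] for entry in listing.get("models", [])]
```

With the server running, `model_names(fetch_json("/api/tags"))` corresponds roughly to ollama ls, and `model_names(fetch_json("/api/ps"))` to ollama ps.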

- I ran this setup on a Mac without any issues, using the following configuration:

Chip: Apple M1 Mac Pro

Memory: 16GB

macOS: Sonoma 14.5

Qdrant

Qdrant is an open-source vector database written in Rust. It is built for high-performance, large-scale AI applications and is designed to handle billions of high-dimensional vectors.

Qdrant is a specialized database that allows you to store, search, and manage large datasets of dense vectors, which are commonly used in machine learning and artificial intelligence applications. It is particularly well-suited for use cases such as:

Machine learning: Qdrant is designed to work seamlessly with machine learning frameworks and libraries, making it an ideal choice for building and deploying scalable machine learning models.

Similarity search: Qdrant enables fast and efficient similarity search, which is critical for applications such as image and video search, recommendation systems, and natural language processing.

Nearest neighbor search: Qdrant provides fast and accurate nearest neighbor search, which is essential for applications such as clustering, classification, and anomaly detection.

Here are the steps to set up a Qdrant cloud account and create a first collection:

- To get started, sign up for a free cloud account on Qdrant. Visit https://qdrant.tech/ and click on the ‘Log in’ button to begin the setup process.

- Choose your preferred account login method; in this example, we’ll be using Google.

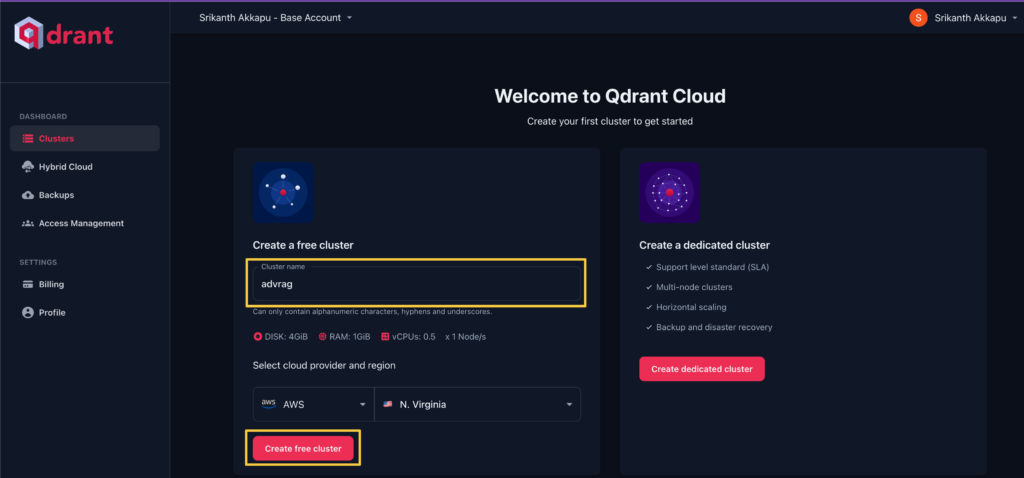

- After logging in, enter a cluster name and click on ‘Create free cluster’ to proceed.

- Copy and store the API key and curl command for future reference. Note that the API key is required to access the dashboard page, while the endpoint can be found on the cluster details page.

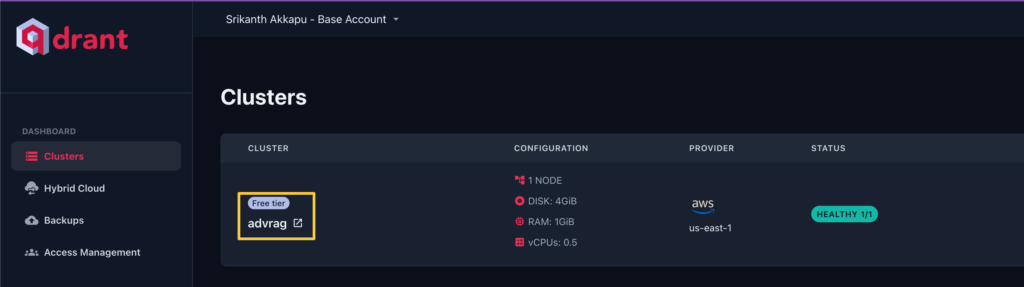

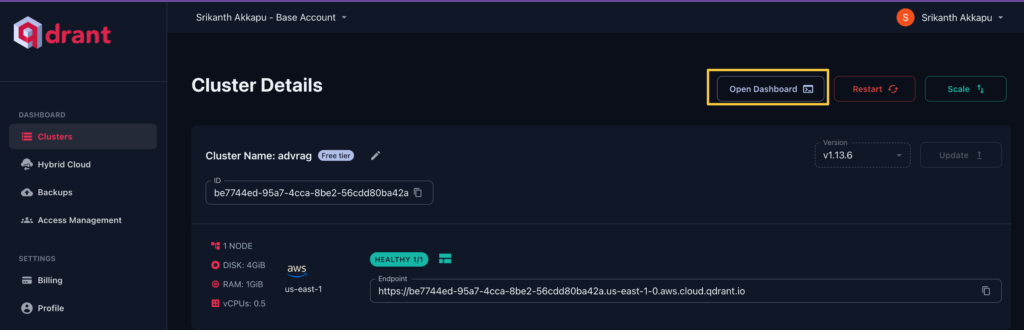

- To access the cluster details, click on the cluster you just created.

- You are now on the cluster details page. Click the ‘Open Dashboard’ button to access the dashboard and explore your cluster’s features and settings.

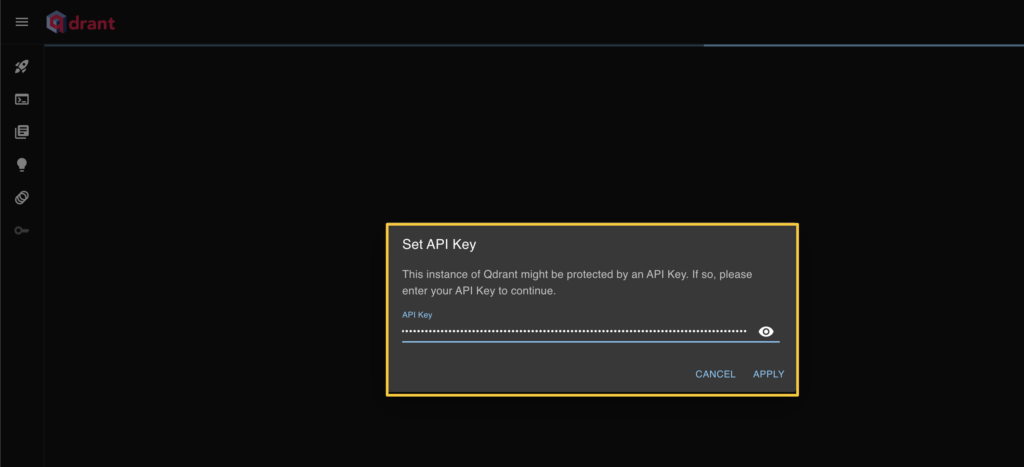

- Enter the API key that you copied earlier in the prompted field.

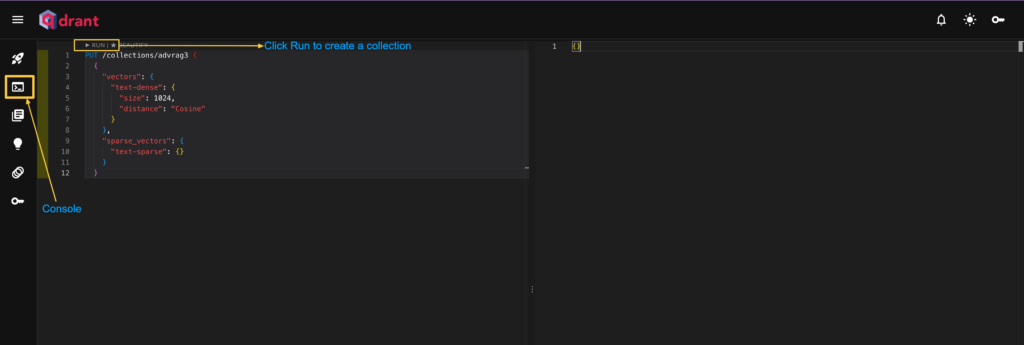

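- Next, open the console in the dashboard and run the following request to create a new collection. The dense vector size of 1024 matches the output dimension of the mxbai-embed-large embedding model pulled earlier.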

PUT /collections/advrag3
{
  "vectors": {
    "text-dense": {
      "size": 1024,
      "distance": "Cosine"
    }
  },
  "sparse_vectors": {
    "text-sparse": {}
  }
}

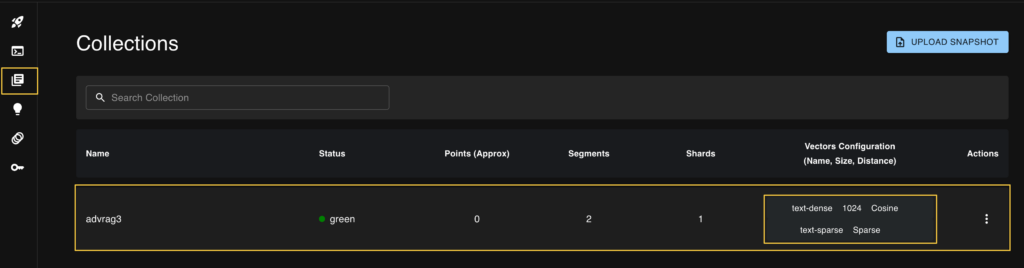
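If you prefer to create the collection from a script instead of the dashboard console, the request can be sent to the cluster's REST endpoint directly. The following is a sketch under stated assumptions: `endpoint` is the cluster URL from your cluster details page, `api_key` is the key you stored earlier, the key is passed in the `api-key` header, and the helper names are illustrative.

```python
import json
import urllib.request

# Same body as the console request: one named dense vector and one
# named sparse vector.
COLLECTION_CONFIG = {
    "vectors": {"text-dense": {"size": 1024, "distance": "Cosine"}},
    "sparse_vectors": {"text-sparse": {}},
}


def build_create_collection_request(endpoint, api_key, name="advrag3"):
    """Build the PUT /collections/<name> request for a Qdrant cluster."""
    return urllib.request.Request(
        f"{endpoint.rstrip('/')}/collections/{name}",
        data=json.dumps(COLLECTION_CONFIG).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="PUT",
    )


def create_collection(endpoint, api_key, name="advrag3", timeout=30):
    """Send the request and return the decoded JSON response."""
    request = build_create_collection_request(endpoint, api_key, name)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `create_collection(endpoint, api_key)` with your own endpoint and key should create the same advrag3 collection. Keep the API key out of source control, for example by reading it from an environment variable.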

- Click on the Collections tab to confirm that the new collection has been created.

Conclusion:

We have now completed the step-by-step setup for both Ollama and Qdrant. For Ollama, install it locally, pull the LLM and embedding models, and make sure your machine meets the minimum system requirements. For Qdrant, you can either run it locally using Docker or use the cloud setup demonstrated in this blog. Once both systems are up and running, you can proceed to the Advanced RAG exercise by following the link below.
